Let It Crash — Turning Failure into Your Most Reliable Signal

Thirteen failed releases reminded me that embracing errors beats prediction. Discover how the Erlang "let it crash" mindset rescued my CI/CD pipeline and the one-line Bash fix that did it.

The Story: Thirteen Failures, One Flashback

I shipped thirteen broken releases before I remembered the one-line fix.

Each failure looked different - misleading CLI output, race conditions, unmet dependencies, but the same fix: a new layer of Bash gymnastics glued on top of the old one.

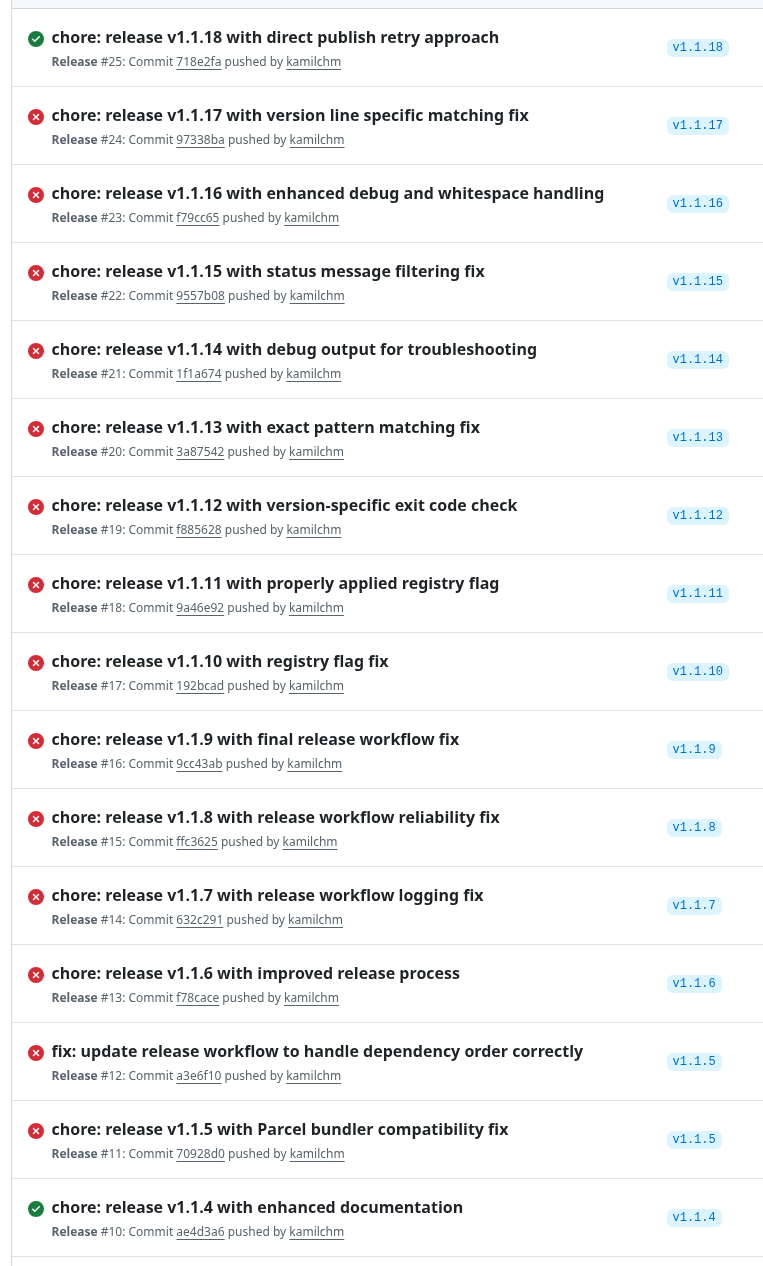

The trail of thirteen failed releases (v1.1.5-v1.1.17) before the simple solution worked

By version 1.1.17 my release script looked over-engineered: a multi-line retry loop packed with debug echos, four separate grep/sed filters, and a blind 10-second sleep between attempts. Nothing worked.

Version 1.1.18 replaced complex spaghetti bash with just one command execution in a simple retry loop:

# Simple retry loop — succeeds when the dependency appears.

for i in {1..12}; do

cargo publish -p zod_gen_derive && break || sleep 10

done

It passed on the first run.

The Setup

I maintain two Rust crates: zod_gen and zod_gen_derive. The second depends on the first, so a release needs to:

- Publish

zod_gen. - Wait until crates.io accepts it.

- Publish

zod_gen_derive.

Where It Went Wrong

I paired with an AI agent to automate the sequence. Here's where things went sideways: the AI kept proposing reasonable-sounding incremental fixes. "Let's add the --registry flag." "Maybe we need better pattern matching." "How about more debug output?" Each suggestion looked logical in isolation, so I kept saying yes.

This created a dangerous feedback loop. I wasn't thinking about the problem the way I would if coding manually—I was just reviewing and approving AI suggestions. The script ballooned through versions 1.1.8 → 1.1.17, adding:

cargo search,cargo info --registry, and half-dozen flags.- Three layers of regex filters plus debug logging.

- Sleep statements tuned by guesswork.

The irony? I'd preached "let it crash" for years, but I thought this was a trivial script we could create in minutes and forget about it. I completely off-loaded the thinking to my AI assistant for this "small thing." The AI's incremental "improvements" kept me in a rabbit hole I would never have entered manually.

Result? A trail of tags on crates.io—and zero successful dependent publishes.

Only after thirteen failures did the old Erlang lesson resurface.

The Principle: Failure as Signal, Not Enemy

Why Engineers Avoid Failure

Engineers hate red. We instinctively add checks to avoid the red X before it appears. That reflex feels safe, yet it breeds hidden costs:

- Complexity: Each avoidance layer creates more states to debug.

- Brittleness: Tools change output; your regex doesn't.

- Time drain: You debug the detection logic and the real problem.

Red flags that signal "prediction gone wild":

- "Let's just parse the CLI output first …"

- Multiple health-check endpoints before every API call.

- Deeply nested

if/elsechains sprinkled with sleeps.

If you spot those, you're predicting instead of observing.

The Erlang Alternative

The Erlang/OTP runtime treats process failure as routine. A crashed process restarts under a supervisor; the system stays healthy. Joe Armstrong's mantra: "Let it crash."

For the technical details: Fred Hébert's "The Zen of Erlang" explains beautifully how Erlang's supervision trees and error handling work in practice.

Translate that philosophy to automation:

- Attempt the operation now.

- If it fails and the failure is recoverable, wait and retry.

- Success ends the loop.

Why it works universally:

A genuine failure is the most accurate readiness probe you will ever find.

- Fewer moving parts → fewer bugs.

- Self-correcting: The loop ends itself when conditions are right.

- Clear feedback: The error message tells you exactly what is missing.

The Working Implementation

Here's the final Bash snippet that finally shipped zod_gen_derive:

for i in {1..12}; do # 2 minutes total

if cargo publish -p zod_gen_derive; then

echo "zod_gen_derive published successfully!"

exit 0

else

echo "Dependency not ready—retrying in 10s…"

sleep 10

fi

done

echo "ERROR: Failed to publish zod_gen_derive after 12 attempts"

exit 1

Key details:

- Retry budget prevents infinite loops.

- Immediate feedback (

cargo publish's own error) shows why it failed. - No parsing—we trust the tool to tell the truth.

Broader Applications: When to Let It Crash

The Decision Framework

Before building prediction logic, ask yourself:

- Am I trying to predict whether an operation will succeed?

- Is the failure recoverable and informative?

- Would the failure tell me exactly what I'm trying to detect?

If yes to all three → let the operation fail and retry.

The pattern scales from shell scripts to distributed systems—failure as signal works at every level.

Prompting Your AI Assistant to "Let It Crash"

LLMs seem to default to "failure avoidance" patterns. The "let it crash" philosophy is probably too niche for their general training—most programming tutorials teach elaborate error checking, not embracing failure as signal.

This means we need to explicitly guide them with prompts. Here are my initial attempts (still experimenting):

If the operation can fail safely, propose a retry loop instead of a readiness check.

Prefer capturing and reacting to the real error message over predicting success.

Show me a version that removes parsing and relies on direct retries (let-it-crash style).

Full disclosure: These prompts are untested. I just learned this lesson myself and will be refining them as I inevitably get kicked again by "avoid failure" thinking. Consider them starting points, not proven solutions.

Conclusion

Thirteen failed attempts tried to avoid failure; one succeeded by trusting it. The core lesson: failure itself is often the most reliable signal you can get. Instead of building elaborate prediction mechanisms, let the operation tell you when it's ready by trying it directly.

The AI collaboration angle was just how I ended up forgetting this principle—but the principle itself is universal and timeless.

Next time you automate a workflow, start with a naive attempt, watch it crash, and wrap it in a controlled retry. Your future self (and your CI budget) will thank you.